Use local files to buffer data and overcome connectivity issues

When uploading data from a location with an unstable connection, you can use the integrated queue mechanism to bridge downtime by buffering data in memory. However, this approach has its limitations and increases memory usage. Where that is not acceptable, or the downtime is too long to bridge, it is recommended to first store the payload in local files and then upload it with a second flow.

This concept applies not only to uploading data in batches but also to streaming the stored data to external streaming services such as Azure IoT Hub or Kafka.

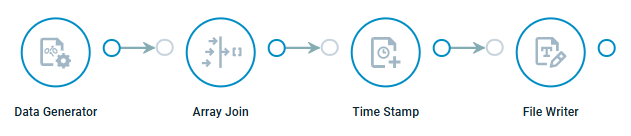

First flow:

- Capture data from the source

- Join messages over a time window or by message count (to avoid creating one file per flow message)

- Create a unique filename (in this case, a timestamp)

- Save the file locally
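The first flow can be sketched in Python as follows. This is a minimal illustration, not the product's implementation. It assumes that messages are JSON-serializable and that each batch is saved as one JSON array. `FileBuffer`, `max_messages`, and `max_age_seconds` are hypothetical names. A sequence number is appended to the timestamp as a tie-breaker, in case two batches are saved within the same clock tick.

```python
import json
import os
import tempfile
import time
from pathlib import Path


class FileBuffer:
    """Hypothetical helper: batches messages and saves each batch to a local file."""

    def __init__(self, buffer_dir, max_messages=100, max_age_seconds=60.0):
        self.buffer_dir = Path(buffer_dir)
        self.buffer_dir.mkdir(parents=True, exist_ok=True)
        self.max_messages = max_messages          # assumed message-count limit per file
        self.max_age_seconds = max_age_seconds    # assumed time-window limit per file
        self._batch = []
        self._batch_started = 0.0
        self._seq = 0                             # tie-breaker for equal timestamps

    def add(self, message):
        """Add one message; return the file path if this triggered a flush."""
        if not self._batch:
            self._batch_started = time.monotonic()
        self._batch.append(message)
        too_many = len(self._batch) >= self.max_messages
        too_old = time.monotonic() - self._batch_started >= self.max_age_seconds
        if too_many or too_old:
            return self.flush()
        return None

    def flush(self):
        """Write the pending batch to a timestamp-named .json file."""
        if not self._batch:
            return None
        self._seq += 1
        final_path = self.buffer_dir / f"{time.time_ns()}-{self._seq:06d}.json"
        # Write to a .tmp file first, then rename it: a reader that only
        # looks for *.json files never sees a half-written file.
        fd, tmp_name = tempfile.mkstemp(dir=self.buffer_dir, suffix=".tmp")
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump(self._batch, f)
        os.replace(tmp_name, final_path)
        self._batch = []
        return final_path
```

In this sketch, the time window is checked only when a new message arrives. A real flow would also call `flush()` from a timer, so that a quiet source does not leave a batch pending indefinitely.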

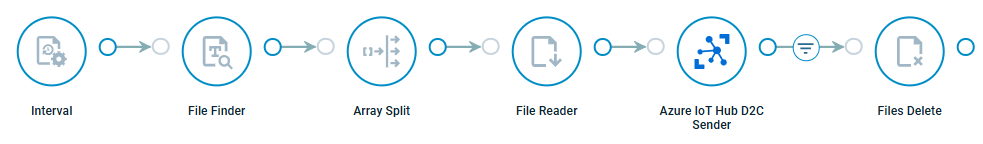

Second flow:

- Use an interval or scheduler to trigger the flow

- Find the files in the folder

- Split the file list into single files

- Read the payload

- Send the data to the external service

- Delete the file if the upload was successful

- Disable queues
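The second flow's file handling can be sketched as a single function, with the scheduler left out. This is a minimal sketch under the following assumptions: the first flow writes one JSON file per batch, and those files have timestamp-based names that sort chronologically. The upload is performed by a caller-supplied `send` function, which is hypothetical. It stands in for an HTTP call, an IoT Hub client, a Kafka producer, and so on, and it is assumed to raise an exception on failure. `drain_buffer` is likewise a hypothetical name.

```python
import json
from pathlib import Path


def drain_buffer(buffer_dir, send):
    """Upload buffered files in order; delete each one only after a successful send.

    `send` is a hypothetical callable for the external service and must raise
    on failure. Returns the number of files uploaded in this run.
    """
    uploaded = 0
    # Find the files in the folder; sorting by name keeps timestamp order.
    # Iterating over the list handles each file individually.
    for path in sorted(Path(buffer_dir).glob("*.json")):
        payload = json.loads(path.read_text(encoding="utf-8"))
        try:
            send(payload)
        except Exception:
            # Upload failed (e.g. connection down): keep this file and all
            # later ones for the next scheduled run, preserving their order.
            break
        # Delete the file only after the upload succeeded.
        path.unlink()
        uploaded += 1
    return uploaded
```

Stopping at the first failure is a design choice. It preserves the upload order and avoids retrying every remaining file against a connection that is already down. Skipping the failed file and continuing would also work if ordering does not matter.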

Note: Disabling queues in the second flow prevents it from processing the same file twice. In theory, this could otherwise happen if the next iteration is triggered before the previous one has finished.
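The effect described in the note can be illustrated outside the flow engine with a trigger guard. The guard drops a trigger while the previous iteration is still running. This sketch assumes that disabling queues means extra triggers are discarded rather than buffered. `SkipIfBusy` is a hypothetical helper and not the product's actual mechanism.

```python
import threading


class SkipIfBusy:
    """Hypothetical guard: runs a job at most once at a time.

    Triggers that arrive while the job is running are dropped instead of
    queued, which is assumed to mirror the effect of disabling queues.
    """

    def __init__(self, job):
        self._job = job
        self._lock = threading.Lock()

    def trigger(self):
        # Non-blocking acquire: if the previous iteration still holds the
        # lock, skip this trigger rather than waiting for it.
        if not self._lock.acquire(blocking=False):
            return False
        try:
            self._job()
        finally:
            self._lock.release()
        return True
```

Because the lock is acquired without blocking, a tick that arrives while the job is still running returns `False` immediately. It does not queue a second run over the same files.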