The Parquet Writer Module

The Parquet Writer module is designed to create files from incoming arrays. Due to its efficient compression, parquet is a commonly used format for saving large chunks of data and uploading the created files to external systems, such as AWS S3, Azure DataLake and many more. The module will detect the schema to use based on all objects within the incoming array and the datatype per column based on the first object. By default the module assumes that all rows have the exact same datatype, which might not be true every time. To overcome those cases, you can set schema overrides and also ignore values of the wrong data type. You can read more about this in the module documentation.

The filename can either be defined with a base filename + timestamp or picked up from the incoming message using template syntax.

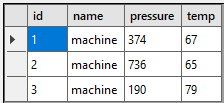

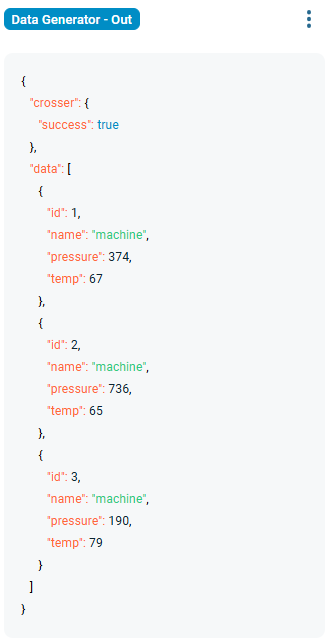

Example input:

Parquet Viewer: